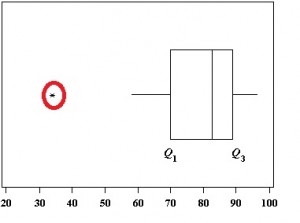

I didn't see anybody mention influence functions. I first saw this idea in Gnanadesikan's multivariate book. In one dimension an outlier is either an extremely large or an extremely small value. In multivariate analysis it is an observation removed from the bulk of the data. But what metric should we use to define extreme for the outlier? There are many choices. I think that looking for every type of outlier is futile and counterproductive. I would ask: why do you care about the outlier? In estimating a mean, outliers can have a great deal of influence on the estimate. Robust estimators downweight and accommodate outliers but they do not formally test for them. In regression, outliers, like leverage points, can have large effects on the slope parameters in the model. With bivariate data they can unduly influence the estimated correlation coefficient, and in three or more dimensions the multiple correlation coefficient.

Influence functions were introduced by Hampel as a tool in robust estimation, and Mallows wrote a nice unpublished paper advocating their use. The influence function is a function of the point you are at in n-dimensional space and of the parameter. It essentially measures the difference between the parameter estimate with the point in the calculation and with the point left out. Rather than go to the trouble of calculating the two estimates and taking the difference, you can often derive a formula for it. The contours of constant influence then tell you the direction that is extreme with respect to the estimate of this parameter, and hence where in the n-dimensional space to look for the outlier.

For more, you can look at my 1983 paper in the American Journal of Mathematical and Management Sciences titled "The influence function and its application to data validation." In data validation we wanted to look for outliers that affected the intended use of the data. My feeling is that you should direct your attention to outliers that greatly affect the parameters you are interested in estimating and not care so much about others that don't.

For moderate dimensions, like 3, some sort of kernel cross-validation technique as suggested elsewhere seems reasonable and is the best I can come up with.

For higher dimensions, I'm not sure that the problem is solvable; it lands pretty squarely in 'curse-of-dimensionality' territory. The issue is that distance functions tend to converge to very large values very quickly as you increase dimensionality, including distances derived from distributions. If you're defining an outlier as "a point with a comparatively large distance function relative to the others", and all your distance functions are beginning to converge because you're in a high-dimensional space, well, you're in trouble. Without some sort of distributional assumption that will let you turn it into a probabilistic classification problem, or at least some rotation that lets you separate your space into "noise dimensions" and "informative dimensions", I think that the geometry of high-dimensional spaces is going to prohibit any easy, or at least robust, identification of outliers.

My first response would be that if you can do multivariate regression on the data, then use the residuals from that regression to spot outliers. (I know you said it's not a regression problem, so this might not help you, sorry!) I'm copying some of this from a Stack Overflow question I've previously answered, which has some example R code.

First, we'll create some data, and then taint it with an outlier:

    > testout
    > #Taint the Data
    > testout$X1
    > testout$X2
    > testout$Y
    > testout

It's often most useful to examine the data graphically (your brain is much better at spotting outliers than maths is):

    > #Use Boxplot to Review the Data

You can then use stats to calculate critical cut-off values, here using the Lund Test (see Lund, R. 1975, "Tables for An Approximate Test for Outliers in Linear Models", Technometrics, vol. …, and 1975, "An Approximate Test for Outliers in Linear Models", Technometrics, vol. …, 129-132.)

    > #Alternative approach using Lund Test
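To make the regression-residual suggestion concrete, here is a minimal sketch in base R. The sample size, means, standard deviations, tainted value, and seed are all illustrative assumptions, not figures from the answer. The cutoff is a generic Bonferroni-adjusted t quantile used as a stand-in, and it is not the tabulated Lund critical value.

```r
# Hypothetical data: every number below is an assumption for illustration
set.seed(42)
testout <- data.frame(
  X1 = rnorm(50, mean = 50, sd = 10),
  X2 = rnorm(50, mean = 5, sd = 1.5),
  Y  = rnorm(50, mean = 200, sd = 25)
)

# Taint the data: give row 10 an implausible response value
testout$Y[10] <- 530

# Graphical check without drawing: values a boxplot would mark as outliers
box_out <- boxplot.stats(testout$Y)$out

# Fit the regression and take externally studentized residuals
fit <- lm(Y ~ X1 + X2, data = testout)
stud_res <- rstudent(fit)

# Bonferroni-adjusted two-sided cutoff (stand-in, NOT the Lund tables)
n <- nrow(testout)
p <- length(coef(fit))
cutoff <- qt(1 - 0.05 / (2 * n), df = n - p - 1)

# Rows whose studentized residual exceeds the cutoff
flagged <- which(abs(stud_res) > cutoff)
print(flagged)
```

Calling `boxplot(testout$Y, ylab = "Y")` draws the same information that `boxplot.stats` returns here. To follow the Lund approach specifically, the cutoff would have to come from the published tables rather than from `qt`.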
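The influence idea described above, the difference between a parameter estimate with a point included and with it left out, can be sketched empirically for the correlation coefficient. This is a brute-force leave-one-out version rather than a derived formula, and the simulated data, the planted point at (4, -4), and the seed are assumptions made only for illustration.

```r
# Hypothetical bivariate data with a positive linear relationship
set.seed(1)
n <- 40
x <- rnorm(n)
y <- 0.8 * x + rnorm(n, sd = 0.6)

# Plant one aberrant point that runs against the trend (an assumption)
x[n] <- 4
y[n] <- -4

# Estimate using all points
r_full <- cor(x, y)

# Empirical influence of point i: estimate with i minus estimate without i
loo_influence <- sapply(seq_len(n), function(i) r_full - cor(x[-i], y[-i]))

# The point whose removal changes the correlation the most
most_influential <- which.max(abs(loo_influence))
print(most_influential)
print(loo_influence[most_influential])
```

The same loop works for any parameter of interest (a mean, a slope, a multiple correlation), which fits the advice to look for outliers that affect the quantity you actually intend to estimate.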
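The convergence of distances in high dimensions can be seen in a small simulation. This sketch assumes standard normal data and Euclidean distance, and the function name `relative_contrast`, the dimensions tried, and the sample size are hypothetical choices. It reports how wide the spread of pairwise distances is relative to their mean.

```r
# Spread of pairwise Euclidean distances relative to their mean,
# for n standard normal points in d dimensions (hypothetical helper)
relative_contrast <- function(d, n = 200) {
  pts <- matrix(rnorm(n * d), nrow = n)
  dists <- as.vector(dist(pts))
  (max(dists) - min(dists)) / mean(dists)
}

set.seed(7)
dims <- c(2, 10, 100, 1000)
contrast <- sapply(dims, relative_contrast)

# A shrinking contrast means the farthest pair is barely farther than the nearest
print(data.frame(dimension = dims, relative_contrast = contrast))
```

As the contrast shrinks, "a comparatively large distance relative to the others" stops discriminating between points, which is the difficulty the answer describes.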
